Hi folks, this is CCS.

Welcome to the February 03, 2026 newsletter from IAMCCS. The last weeks have been intense, dense, and honestly very rewarding: a lot of architectural work, several new workflows released, and a clear direction emerging around long-form video, audio-driven pipelines, and generative cinema as a real production practice — not a demo playground.

––––––––––––––––––––––

RECENT POSTS YOU MAY HAVE MISSED

––––––––––––––––––––––

Over the past weeks on patreon.com/IAMCCS I’ve published a series of posts that together form a coherent arc, not isolated drops.

We went deep into LTX-2 long-length Image-to-Video workflows, breaking down not just how to extend clips, but why certain numbers, overlaps, and frame rules exist. These posts include both hands-on workflows and technical reasoning (Extension Modules, frame math, overlap strategies), plus attached PDFs and diagrams for those who want to understand the skeleton of the pipeline rather than copy settings.

Some of the exclusive PDFs for Patreon supporters

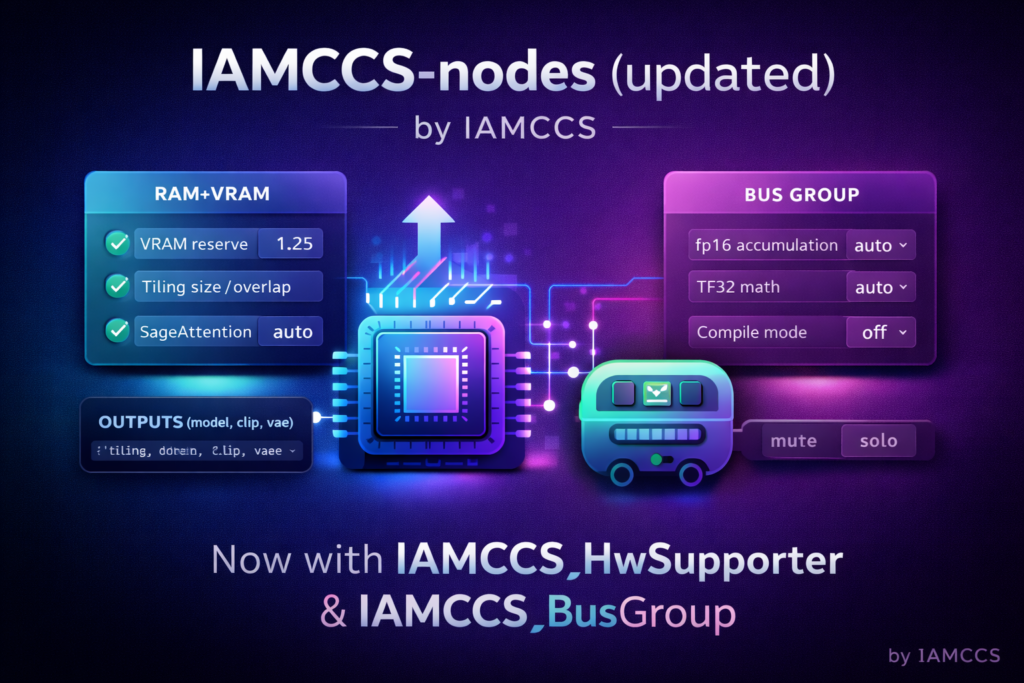

Some of the exclusive PDFs for Patreon supportersAlongside that, I released updates to IAMCCS_nodes and IAMCCS_annotate, introducing AutoLink improvements, BusGroup, HWsupporters, new LTX-2 utility nodes, and Annotate v2.0.0 — which turns annotations into a real spatial thinking tool inside ComfyUI, not just sticky notes.

https://github.com/IAMCCS/IAMCCS-nodes

https://github.com/IAMCCS/IAMCCS_annotate

We also explored WAN 2.2 long-length workflows with SVI Pro v2, including the WanImageMotion node, which addresses the infamous “fake slow-motion” effect when using LoRAs. This wasn’t a cosmetic fix: it’s a structural intervention on how motion energy is handled at the latent level.

Finally, I shared a more theoretical but very personal post, When Cinema Thinks Through Form, connecting optical imperfection, anamorphic language, and generative cinema — a bridge between filmmaking practice and AI research that sets the ground for beta LoRA and Z-Image work.

If you’re following the ecosystem closely, you’ll notice that all these posts talk to each other. That’s intentional.

Here’s the links to the newer posts:

-

IAMCCS_nodes Update: New Utilities in Town 🚀 – https://www.patreon.com/posts/iamccs-nodes-new-149700645

-

🎧 Qwen3-TTS + LTX-2: Audio-Driven I2V Workflow with Lip Sync – https://www.patreon.com/posts/qwen3-tts-ltx-2-149701660

-

LTX-2 in Practice: Image-to-Video & Text-to-Video Workflows – https://www.patreon.com/posts/ltx-2-in-image-147624793

-

No more slo-mo with WAN 2.2 Long Lenght videos with SVI Pro v2 thanx to our new IAMCCS_node: WanImageMotion! – https://www.patreon.com/posts/147670329

For Patreon supporters

-

LTX-2 I2V long lenght videos breakdown

– https://www.patreon.com/posts/149232500 -

LTX-2 Audio + Image → Video (v2): an audio-first breakthrough using RVC, Kokoro, Qwen3-TTS, ChatterBox & Maya

https://www.patreon.com/posts/ltx-2-audio-v2-149743775

––––––––––––––––––––––

AI NEWS — QUICK ROUNDUP

––––––––––––––––––––––

Over the last months, generative AI has clearly moved beyond the “wow demo” phase, shifting toward a workflow-centric culture focused on control, repeatability, and long-form production.

• NVIDIA @ CES 2026 made a strong move toward real, usable AI on personal machines: major optimizations for open-source tools like ComfyUI, llama.cpp and Ollama (FP8 / NVFP4, better memory handling, GPU token sampling), with ComfyUI workflows seeing up to 3× speedups on RTX systems.

https://developer.nvidia.com | https://blog.nvidia.com

• LTX-2 keeps proving that video generation is no longer about clips, but about systems: synchronized audio-video generation, higher resolutions, and a structure that finally makes cinematic, long-form reasoning possible in open workflows.

https://github.com/Lightricks/LTX-Video

• Z-Image-Base (Alibaba Tongyi) landed as a non-distilled, high-quality foundation model with full Day-0 ComfyUI support — slower than Turbo, but with a much higher artistic ceiling and ideal for LoRA training and serious image work.

https://github.com/Tongyi-MAI/Z-Image

https://blog.comfy.org/p/z-image-day-0-support-in-comfyui

• DeepSeek-OCR-2 introduced a genuinely new idea in vision: “Visual Causal Flow”. Instead of scanning images mechanically, the model reasons about layout like a human reader — a big step forward for documents, PDFs, and real visual understanding.

https://github.com/deepseek-ai/DeepSeek-OCR-2

• MOVA (OpenMOSS) filled a crucial gap in open source: a true end-to-end video + audio generation model, with native lip-sync and environmental sound, avoiding the fragile cascaded pipelines we’ve all been hacking around.

https://github.com/OpenMOSS/MOVA

https://openmoss.github.io/MOVA/

• Qwen3-TTS raised the bar for open-source voice: ultra-low latency (97ms), 3-second voice cloning, cross-language voices, and natural-language voice design — finally making audio a first-class, structural component of generative workflows.

https://github.com/QwenLM/Qwen3-TTS

https://huggingface.co/collections/Qwen/qwen3-tts

––––––––––––––––––––––

GOYAI CANVAS — PROGRESS UPDATE

––––––––––––––––––––––

goyAIcanvas is moving forward steadily.

In the latest internal update, I’ve been refreshing and aligning the models embedded inside the LTX-2 node logic, improving consistency between image, audio, and video stages. The idea remains the same: goyAIcanvas is not a “preset machine”, but a structured environment for thinking, testing, and building pipelines.

What is goyAIcanvas? GoyaICanvas is my personal research platform for image and video generation, built as both a ComfyUI node ecosystem and a future standalone tool.

I’m using it as the first beta tester for my own projects, and it has been a real game-changer: it dramatically simplifies my generative workflow.

It lets me focus on what I want to create, not on hunting for tools to make an idea work — images, video, audio, and timing all treated as one authored system.

I’ll be opening free beta access for my supporters very soon — likely at the beginning of March — so you’ll be able to try GoyaICanvas early, break it, test it, and help shape it with real, hands-on feedback before anything goes public.

Just a heads-up for my supporters: access to the node will be exclusive, handled through dedicated access and tokens. If you feel like supporting my work, this is how you’ll get your hands on this little gem. I’ll also keep sharing real-time progress updates in the next newsletters, so you’ll always know where things stand.

––––––––––––––––––––––

MY PROJECTS — WHERE I’M HEADED

––––––––––––––––––––––

Alongside the push for AI democratization that I’m carrying forward here on Patreon (and thank you again for the support — it truly matters), my personal projects are moving ahead in parallel. I still see AI as a tool in our hands, not something that should use us — which is why I keep working on ways to embed generative AI inside craft-driven flows, built through real brainstorming, real people, and ideas born in the human brain.

Organic stuff, first and always.

FILM

Some of you may know my first feature film, Sometimes in the Dark, which you can watch worldwide.

If you’re curious, check my website for all the links and platforms where it’s available — it’s still very much part of my ongoing dialogue between cinema, form, and experimentation.

https://carminecristalloscalzi.com/cinema/

As for film projects, I’m keeping things intentionally quiet — but early development continues on my dark-fantasy feature based on my illustrated book “Di come Edgar Letfall sconfisse l’Impostore” (soon also available in English), alongside two other projects: one fully rooted in AI cinema, and another entirely analog — a historical film developed as a Spain–Italy–UK co-production. Once those projects reach a more mature stage, I’ll start sharing more here as well.

MUSIC

As many of you know, while I strongly believe in AI cinema as a force for democratization — shifting power away from a few gatekeepers who often lack even basic cinematic literacy and putting it back into the hands of those with ideas and tools — I do not share the same view when it comes to AI music.

Music is one of the most fragile and profound human languages we have. Handing its core expressive act over to machines risks hollowing it out, turning something lived into something merely generated. For this reason, I do not use AI as a substitute for musical creation: my tracks remain fully handcrafted, from the first note to the final arrangement.

In the meantime, the first single from my upcoming EP in-die (with English lyrics) should be ready very soon — hopefully next week. After my Italian releases (you can find them on Spotify here: https://open.spotify.com/artist/4blt5ssHvlcvLFhM11vaFG), this feels like a natural next step.

The versions released on Spotify, Amazon Music, and other major platforms will be classic radio edits. The full ALBUM — including extended versions, acoustic takes, B-sides, and additional material, conceived as a real album — will be available exclusively on Bandcamp. This is a conscious choice: I no longer want to be part of the economic squeeze that streaming platforms like Spotify have imposed on artists worldwide.

In short: if you truly support my work, you’ll know where to find me.

––––––––––––––––––––––

More soon — and thanks for walking this path with me.

CCS / IAMCCS